A few weeks ago I stumbled on the article "A co-Relational Model of Data for Large Shared Data Banks" ("coRel" from here on) in the online version of ACM Queue (the magazine of the Association for Computing Machinery). The article was written by two Microsoft employees, Erik Meijer and Gavin Bierman.

The authors summarize their thesis thus: "Contrary to popular belief, SQL and noSQL are really just two sides of the same coin." The problem with this article is that the authors are asking entirely the wrong question. They are looking at the world with a very narrow and single-minded perspective of data - a perspective which was conventional wisdom up until the 1970s (academically), and the 1980s (commercially).

In technical terms, the authors are basically asking this question: If it is possible to implement a Network Model using a Key/Value [NoSQL] database, and it is possible to implement a Network Model using a Relational database, then can the two be queried and modified by the same declarative language? The answer to this question is a resounding yes. Unfortunately, the Network Model is not perspective-neutral, which is why the Relational Model was invented.

Backing up a bit, allow me to explain what I mean. The Relational Model takes a perspective-neutral approach, and regards all entities, no matter how insignificant they may seem, as "first class citizens". Other data models such as the Network Model and Hierarchical Model lock the data into a given perspective and make certain entities "first class citizens" and others "second class citizens". For example, if you have ever organized your inbox e-mails into folders, or documents on your computer into folders, you have probably chosen a certain hierarchy. Maybe you organized your folders by customer, so that when a customer asks a question, you can quickly go to the right folder and find all the necessary information. But what if a project manager comes to you and starts asking questions about a particular project, and that project cuts across customers? What normally happens here is you start searching through each customer folder looking for e-mails or documents that pertain to the project. Most people will just copy those project documents (or create shortcuts to them) into another folder. We've all been through these searching and sorting exercises. When software developers are confronted with the same problem, they pretty much do the same thing - they reorganize (or refactor) the data.

However, if the data were Normalized (i.e. modeled relationally) to begin with, no such reorganization would be necessary.
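To make the folder analogy concrete, here is a minimal sketch in Python using the standard library's sqlite3 module. The table names, column names and sample rows are my own invention for illustration; nothing here comes from coRel. The point is only that once customers, projects and documents are separate relations, neither the "by customer" nor the "by project" question is privileged.

```python
import sqlite3

# Hypothetical schema: every entity is its own table, none is nested in another.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
    CREATE TABLE project  (project_id  INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
    CREATE TABLE document (
        document_id INTEGER PRIMARY KEY,
        title       TEXT NOT NULL,
        customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
        project_id  INTEGER NOT NULL REFERENCES project(project_id)
    );
    INSERT INTO customer VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO project  VALUES (1, 'Migration'), (2, 'Audit');
    INSERT INTO document VALUES
        (1, 'Acme kickoff notes',   1, 1),
        (2, 'Globex kickoff notes', 2, 1),
        (3, 'Acme audit report',    1, 2);
""")

def documents_for_customer(name):
    """The account manager's view: everything filed under one customer."""
    rows = conn.execute(
        "SELECT d.title FROM document d "
        "JOIN customer c ON c.customer_id = d.customer_id "
        "WHERE c.name = ? ORDER BY d.title", (name,))
    return [title for (title,) in rows]

def documents_for_project(name):
    """The project manager's view: same data, cutting across customers."""
    rows = conn.execute(
        "SELECT d.title FROM document d "
        "JOIN project p ON p.project_id = d.project_id "
        "WHERE p.name = ? ORDER BY d.title", (name,))
    return [title for (title,) in rows]

print(documents_for_customer("Acme"))
print(documents_for_project("Migration"))
```

Neither query required copying documents or creating shortcuts; the second perspective came for free.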

The authors' (and the majority of software developers') myopia is apparent in their view of history, the example data model they provide, and even in computer science theory.

Let's talk about history first. coRel has this to say:

Codd's relational model and SQL allowed implementations from different vendors to be (near) perfect substitutes, and hence provided the conditions for perfect competition. Standardizing on the relational model and SQL created a secondary network effect around complementary producers such as educators, tool vendors, consultants, etc., all targeting the same underlying mathematical principles. Differences between actual relational database implementations and SQL dialects became to a large extent irrelevant.

While it's true that standardization around SQL led to wide adoption, such standardization had already emerged prior to the introduction of the relational model. Namely, CODASYL (the same body that created COBOL) developed a standard around the aforementioned Network Model, often referred to by the name of the group that produced it, the Data Base Task Group (DBTG). Much of what you see in modern SQL standards actually comes from this standard - in particular the separation of DDL (data definition language) from DML (data manipulation language). However, CODASYL vendors (and there were a lot of them) were blindsided by Codd's relational model.

Interestingly, Codd's original language was not SQL, but rather Alpha. Also, the early major RDBMS vendors had competing languages: Ingres used a language called Quel, while Oracle and IBM used SQL. But because Ingres was always based on the relational model, it was able to simply slap on support for SQL. Ingres lives on to this day, most visibly through its descendant PostgreSQL. The non-relational vendors also live on to this day, but tend to serve particular niches (e.g. IBM's IMS is still heavily used in banking). There was nothing inherently special about SQL, and other relational languages are still around and continue to be invented. What is special is the underlying Relational Model.

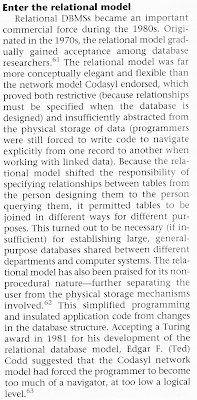

For a better explanation of why the Relational Model entered the marketplace, here's a passage I scanned in from the article "The Commercialization of Database Management Systems, 1969-1983", found in the IEEE Annals of the History of Computing, Volume 31, Number 4, October-December 2009:

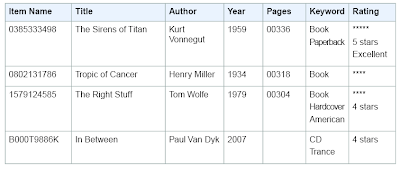

I am now going to talk about the sample data model used by coRel. It is based on an example taken from Amazon's SimpleDB. Here is what the original data looks like, as described by Amazon:

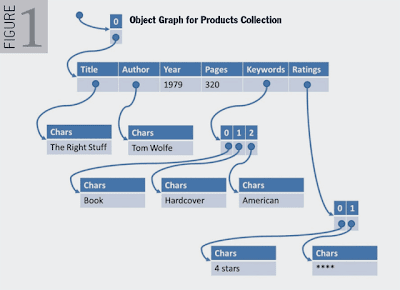

Here is how the authors model this using an object model (essentially a Network Model):

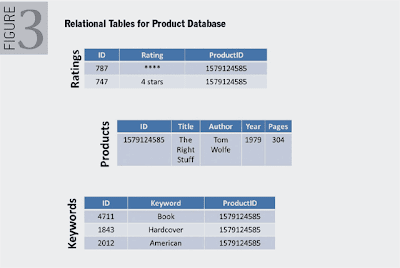

Here is how the authors model this using a Relational Model:

If you're an experienced relational modeller, you will observe that the model is not in BCNF (Boyce-Codd Normal Form), as two of the entities, Ratings and Keywords, have overlapping candidate keys.

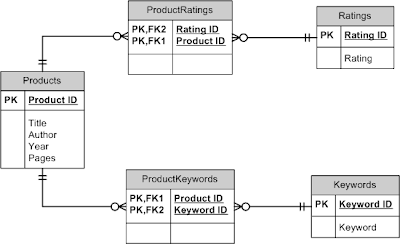

Here is what the data model should look like in BCNF:

From the perspective of the Product Catalog application, this change seems somewhat academic. However, if we extend our normalized data model to include Tweets retrieved via Twitter keyword searches - which is important from the perspective of a marketer - things get more interesting. Here's the updated model:

What is important to note in this normalized model is that all entities are "first class citizens". As a Product Catalog application developer, I can ask questions or make changes to Keywords and Products, without having to involve Tweets. Or, as a marketer, I can ask questions about Tweets and Keywords without having to involve Products.

When data is seen in a larger context with many different perspectives, the relational model makes sense. While it may be more efficient to model data for a particular perspective (i.e. the product catalog application) using a Network or Object model, the same model can be very inefficient from any other perspective, and can lead to anomalies and contradictions in the data. This point is lost on many developers, since most only deal with a single perspective of the data. The following paragraph in coRel makes this very clear:

Summarizing what we have learned so far, we see that in order to use a relational database, starting with a natural hierarchical object model, the designer needs to normalize the data model into multiple types that no longer reflect the original intent; the application developer must reencode the original hierarchical structure by decorating the normalized data with extra metadata; and, finally, the database implementer has to speed up queries over the normalized data by building indexes that essentially re-create the original nested structure of the data as well.

See the problem? There is rarely such a thing as a 'natural' hierarchy. Perspective is everything, and depending on how we view an ontology, we can ascribe many different hierarchies.

Now, you may be wondering if it is possible to represent a relational model in a NoSQL database, such as a key/value store. The answer is: sort of. While it is possible to recreate the structure of the relational model, it is not possible to centralize the integrity of the relational model. This is not a trivial point. Referential integrity (along with other forms of integrity, such as uniqueness, nullability, and value domain constraints) is what ensures the correctness of ad hoc queries. When such constraints are removed, it is up to each application developer to examine the underlying data and perform numerous tests to ensure its integrity. The end result is poor data integrity and poor data quality. I can speak quite frankly on this last point, as I see the difference between poorly constrained and well constrained data models all the time. Much like entropy under the second law of thermodynamics, the disorder in unconstrained data only grows over time.
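Here is a small sketch of what "centralized integrity" means in practice, again using Python's sqlite3 module. The product and rating tables are hypothetical, loosely inspired by a product catalog, and the helper function `rejected` is my own. Each constraint is declared once in the schema, and the database refuses bad data no matter which application submits it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite ships with foreign key enforcement switched off; turn it on explicitly.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE product (
        product_id INTEGER PRIMARY KEY,
        name       TEXT NOT NULL UNIQUE                 -- nullability + uniqueness
    );
    CREATE TABLE rating (
        product_id INTEGER NOT NULL
                   REFERENCES product(product_id),      -- referential integrity
        stars      INTEGER NOT NULL
                   CHECK (stars BETWEEN 1 AND 5)        -- value domain
    );
    INSERT INTO product VALUES (1, 'Sweater');
""")

def rejected(sql, params=()):
    """Return True if the database itself refuses the statement."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
    except sqlite3.IntegrityError:
        return True
    return False

orphan_rating  = rejected("INSERT INTO rating VALUES (?, ?)", (99, 4))   # no product 99
bad_domain     = rejected("INSERT INTO rating VALUES (?, ?)", (1, 11))   # outside 1..5
duplicate_name = rejected("INSERT INTO product (name) VALUES (?)", ("Sweater",))
missing_name   = rejected("INSERT INTO product (name) VALUES (?)", (None,))
valid_rating   = rejected("INSERT INTO rating VALUES (?, ?)", (1, 4))

print(orphan_rating, bad_domain, duplicate_name, missing_name, valid_rating)
```

In a plain key/value store, each of those four checks would have to be re-implemented, and kept in sync, in every application that writes to the data.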

In other words, the relational model allows us to manage information as a separate concern. You might even say this is the whole point of the relational model.

I now want to move on to the theoretical aspects of the paper, in particular the question of compositionality. coRel's authors define compositionality as "the ability arbitrarily to combine complex values from simpler values without falling outside the system". They go on to say:

SQL is rife with noncompositional features. For example, the semantics of NULL is a big mess: why does adding the number 13 to a NULL value, 13+NULL, return NULL, but summing the same two values, SUM(13, NULL), returns 13?

A more precise definition of compositionality comes from Wikipedia, which states: "An important aspect of denotational semantics of programming languages is compositionality, by which the denotation of a program is constructed from denotations of its parts."

By this definition, SQL queries are compositional, since a query has no side effects. Contrast this with most imperative programming languages (e.g. Java, C#, Python), which do not guarantee compositionality, since it's possible to write modules that affect other modules.

But I don't think the authors were thinking along these lines. They're really arguing that SQL is inconsistent and point out the example with the NULLs.

The reason why there is this perception of non-compositionality is that sets and tuples are treated as primitives. You cannot make an aggregate function out of tuple functions, and you cannot make a scalar function out of aggregate functions.
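The distinction is easy to see by running both forms. This is a minimal sketch against SQLite via Python's sqlite3 module; the table `t` and column `n` are placeholders of my own. Note that coRel's `SUM(13, NULL)` is shorthand: in real SQL, `SUM` is an aggregate over rows, not a two-argument function, so the two values have to be stored as two rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Scalar (tuple-level) expression: one unknown operand makes the result unknown.
(scalar_result,) = conn.execute("SELECT 13 + NULL").fetchone()

# Aggregate (set-level) function: rows whose value is NULL are left out of the
# set before summing, so only the 13 contributes.
conn.execute("CREATE TABLE t (n INTEGER)")
conn.executemany("INSERT INTO t VALUES (?)", [(13,), (None,)])
(aggregate_result,) = conn.execute("SELECT SUM(n) FROM t").fetchone()

print(scalar_result)     # None, i.e. NULL
print(aggregate_result)  # 13
```

The two results differ because they answer different questions: "what is 13 plus an unknown number?" versus "what is the total of the values we actually know?"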

NULLs are controversial to this day, and Codd even wanted to take things a step further, and distinguish between values that are "missing but applicable" and those that are "missing and inapplicable".

Codd's basic argument for the inclusion of NULLs (and three-valued logic) can be summarized thus: In the real world, handling unknown values is inherently complex. Instead of thrusting the complexity back to the user, the RDBMS should handle unknowns "correctly" - in so far as the behavior correctly models real world behavior. This can result in NULLs being counter-intuitive, but just because something is counter-intuitive doesn't mean it's wrong (think flat earth intuition, round earth reality).

For example, let's say you are a hotel manager and you want to know the average number of days a guest stayed. Assume you have a database where front reception logs check-in and check-out times. Obviously, when a guest hasn't checked out yet, the check-out time is unknown (or doesn't exist), so that attribute would be NULL. When taking the average of check-out minus check-in, you will only be including rows where both the check-in and check-out times are known, since when a tuple contains an unknown element [unless stated otherwise] the tuple as a whole cannot be known, and should be eliminated from the set. This is what you want. Putting it in a SQL query, it would look like this:

    SELECT
        AVG(DATEDIFF(CheckOutTime, CheckInTime))
    FROM
        BOOKINGS

As you can see, it's a very simple query to write and validate, and reflects the correct handling of NULLs. Quite the opposite of a "big mess".
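If you want to try this yourself, here is a runnable sketch using Python's sqlite3 module. Two things are assumptions on my part: the sample guests and dates are made up, and because SQLite has no `DATEDIFF` function, I substitute a difference of `julianday()` values, which gives the same number of days.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE BOOKINGS (
        Guest        TEXT NOT NULL,
        CheckInTime  TEXT NOT NULL,
        CheckOutTime TEXT            -- NULL until the guest checks out
    )
""")
conn.executemany("INSERT INTO BOOKINGS VALUES (?, ?, ?)", [
    ("Ann",  "2011-05-01", "2011-05-03"),  # stayed 2 days
    ("Bob",  "2011-05-02", "2011-05-06"),  # stayed 4 days
    ("Cleo", "2011-05-05", None),          # still checked in
])

# julianday(NULL) is NULL, NULL minus anything is NULL, and AVG skips NULLs,
# so Cleo's open-ended stay never enters the average.
(avg_stay,) = conn.execute(
    "SELECT AVG(julianday(CheckOutTime) - julianday(CheckInTime)) FROM BOOKINGS"
).fetchone()

print(avg_stay)  # 3.0
```

No `WHERE CheckOutTime IS NOT NULL` clause was needed; the NULL semantics did the filtering.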

But the whole question of compositionality is also missing the point with SQL. SQL is not a computational language - it's a data retrieval language, based on the relational model. When we're talking about data, compositionality is not our main concern - normalization is. Data which is not normalized is like a program which is non-compositional. Nasty side effects can and will arise.
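A classic example of such a side effect is the update anomaly. The sketch below (Python's sqlite3 again, with table names and data I made up for illustration) stores a product's category redundantly on every keyword row, then changes it through just one of those rows. The normalized version stores the fact once, so the contradiction cannot arise.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Denormalized: the product's category is repeated on every keyword row.
    CREATE TABLE product_keyword_flat (product TEXT, category TEXT, keyword TEXT);
    INSERT INTO product_keyword_flat VALUES
        ('Sweater', 'Clothes', 'wool'),
        ('Sweater', 'Clothes', 'winter');

    -- Normalized: the category is a fact about the product, stored exactly once.
    CREATE TABLE product (product TEXT PRIMARY KEY, category TEXT NOT NULL);
    CREATE TABLE product_keyword (
        product TEXT NOT NULL REFERENCES product(product),
        keyword TEXT NOT NULL,
        PRIMARY KEY (product, keyword)
    );
    INSERT INTO product VALUES ('Sweater', 'Clothes');
    INSERT INTO product_keyword VALUES ('Sweater', 'wool'), ('Sweater', 'winter');
""")

# An application renames the category, but only on the row it happens to hold.
conn.execute(
    "UPDATE product_keyword_flat SET category = 'Apparel' WHERE keyword = 'wool'")
flat_categories = [c for (c,) in conn.execute(
    "SELECT DISTINCT category FROM product_keyword_flat "
    "WHERE product = 'Sweater' ORDER BY category")]

# The same change against the normalized tables touches the single source of truth.
conn.execute("UPDATE product SET category = 'Apparel' WHERE product = 'Sweater'")
normalized_categories = [c for (c,) in conn.execute(
    "SELECT DISTINCT p.category FROM product_keyword k "
    "JOIN product p ON p.product = k.product "
    "WHERE k.product = 'Sweater' ORDER BY p.category")]

print(flat_categories)        # ['Apparel', 'Clothes'] - the data now contradicts itself
print(normalized_categories)  # ['Apparel']
```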

---

The funny thing about the relational model is that it is predicated on Relational Algebra, which is completely orthogonal to the Universal Turing Machine. The former is about logic, and the latter is about flow. They are not in competition.

But when I hear people say things like "it's about time somebody built a better database than those stupid RDBMSs", it's akin to saying "it's about time somebody built a better Universal Turing Machine". Makes no sense really.

Before I conclude this post, I want to share with you an excerpt from Joe Celko's "Thinking In Sets" which is very telling:

Many years ago, the INCITS H2 Database Standards Committee (née ANSI X3H2 Database Standards Committee) had a meeting in Rapid City, South Dakota. We had Mount Rushmore and Bjarne Stroustrup as special attractions. Mr. Stroustrup did his slide show with overhead transparencies (yes, this was before PowerPoint was ubiquitous!) about Bell Labs inventing C++ and OO programming, and we got to ask questions.

One of the questions was how we should put OO features into the working model of the next version of the SQL standard, which was known as SQL3 internally. His answer was that Bell Labs, with all their talent, had tried four different approaches to this problem and they came to the conclusion that it should not be done. OO was great for programming but deadly for data.

Summing up: while I am being critical of the coRel paper, there was clearly a lot of thought that went into it, and the authors come across as being intelligent and having a good pedigree. My point is that there is an institutional bias towards application-centric data modeling, which comes at the expense of perspective-neutral data modeling - i.e. the Relational Model.

It has been my experience that this bias has led to a great deal of friction between software or application architects and data architects, much to the frustration of both.

My greatest hope is that by educating students at an earlier age, this deep-rooted bias can be avoided. This, I will point out, is a long-running project of mine. A blog for another day.